AI systems like Large Language Models (LLMs) are now woven into the fabric of your business operations. They handle customer service inquiries, write content, analyze data, and sometimes even participate in decision-making processes. But as these powerful tools become central to your operations, a critical question emerges: how secure are they against attackers?

When your company deploys these AI tools, you face a new landscape of security risks unlike those of traditional systems. Hackers can manipulate LLMs in unique ways that bypass conventional security measures.

The Hidden Vulnerabilities in Your AI Systems

Security professionals use penetration testing (or “pentesting”) to identify weaknesses before hackers do. With AI systems, this practice takes on new dimensions. Here’s what can go wrong without proper testing:

Information Leakage: Attackers can extract sensitive company information, intellectual property, or customer data that was inadvertently included in the AI’s training data.

Prompt Injection: Hackers can feed carefully crafted inputs that trick your AI into ignoring its safety guidelines. This is similar to how SQL injection works in databases but requires different testing approaches.

Security Guardrail Bypass: Just as your teenage child might find creative ways around house rules, attackers create “personas” (like the DAN examples mentioned by security researchers) that trick LLMs into breaking their own rules.

API Vulnerabilities: Your AI likely connects to other systems through APIs, creating potential entry points for attackers to access your broader infrastructure.

Real-world examples show these aren’t theoretical concerns. Twitter’s AI service (Grok) had a vulnerability where it would follow instructions hidden in other users’ posts. This means someone could use your own AI tools as a gateway to attack your company’s systems or reputation.

Penetration Testing for AI: What Your Security Team Should Be Doing

Your technology team needs to adapt traditional security testing approaches for AI systems. Here are key areas they should focus on:

1. Information Gathering

Security testers should thoroughly map what your AI can access and its functionality. Can it read internal documents? Connect to customer databases? Send emails? The broader its access, the larger your attack surface becomes.

Pentesting teams should examine both direct model interactions (through chat interfaces) and indirect methods (through file uploads or web browsing features).

2. Guardrail Testing and Prompt Injection

Skilled pentesters attempt to break your AI’s ruleset through various techniques:

- Basic prompt injections that directly ask the AI to ignore previous instructions

- Persona adoption techniques where the AI is asked to role-play entities free from restrictions

- Base64 encoding or other obfuscation methods to slip restricted content past filters

- Using the AI’s own error messages against it to reveal system information

Good pentesters try these approaches systematically, documenting which safety measures hold and which fail.

3. Document and File Processing Security

If your AI can process uploaded files or browse websites, pentesters should test what happens when someone uploads documents containing hidden instructions or directs the AI to malicious web pages.

This indirect prompt injection represents a serious risk many companies overlook entirely.

4. Sensitive Data Exposure Testing

Penetration testers should probe whether your AI can be tricked into exposing:

- Authentication credentials

- Internal document contents

- Meeting summaries or calendar information

- Database connection details

- AWS keys or other cloud access tokens

- Production system details

5. System Prompt Recovery

Pentesters attempt to extract the core instructions (system prompt) given to your AI. This can reveal internal policies, capabilities, and potential security bypasses that could be exploited.

Key Questions to Ask Your Security Team

As a business leader, you don’t need technical expertise to ensure proper AI security. Ask these questions:

- “Have we conducted comprehensive penetration testing specifically tailored to our AI systems?”

- “What sensitive information repositories can our AI access, and have we tested the security of those connections?”

- “What attack scenarios involving our AI have we simulated, and what were the results?”

- “How do we test new LLM features before they reach production?”

- “Do we have a regular schedule for AI security testing, especially after model updates?”

- “What industry standards or frameworks are we following for AI security?”

- “How do our AI security measures compare to other companies in our industry?”

Practical Steps for Better AI Security

Even without technical background, you can improve your company’s AI security posture:

- Mandate Regular LLM Penetration Testing: Just as you would for websites and apps, require routine security assessments specific to AI.

- Apply the Principle of Least Privilege: Ensure your AI can only access the information and systems it needs to function.

- Implement Strong Authentication: Control who can interact with your AI systems, especially those with access to sensitive information.

- Create an AI Incident Response Plan: Prepare for what happens if your AI is compromised or begins producing harmful outputs.

- Consider Third-Party Experts: AI security is a specialized field. External penetration testers with LLM expertise can find vulnerabilities your internal team might miss.

The Business Case for AI Security Testing

AI security isn’t merely a technical concern—it’s a business imperative. The consequences of inadequate testing can include:

- Financial losses from data breaches or system compromises

- Regulatory penalties if customer data is exposed

- Reputation damage if your AI produces harmful content

- Loss of competitive advantage if proprietary information leaks

- Legal liability if your AI causes harm to users

The investment in proper AI security testing is minimal compared to these potential costs. Most AI security incidents happen not because the technology is inherently flawed, but because companies rush to implement it without appropriate security measures.

Looking Forward

AI capabilities will continue to expand rapidly, and so will the techniques attackers use to exploit them. Regular penetration testing helps you stay ahead of these evolving threats.

Make sure your security team treats AI systems with the same, if not greater, care as any other critical business system. The fundamental principles remain: identify vulnerabilities before attackers do, protect sensitive data, test thoroughly, and prepare for incidents.

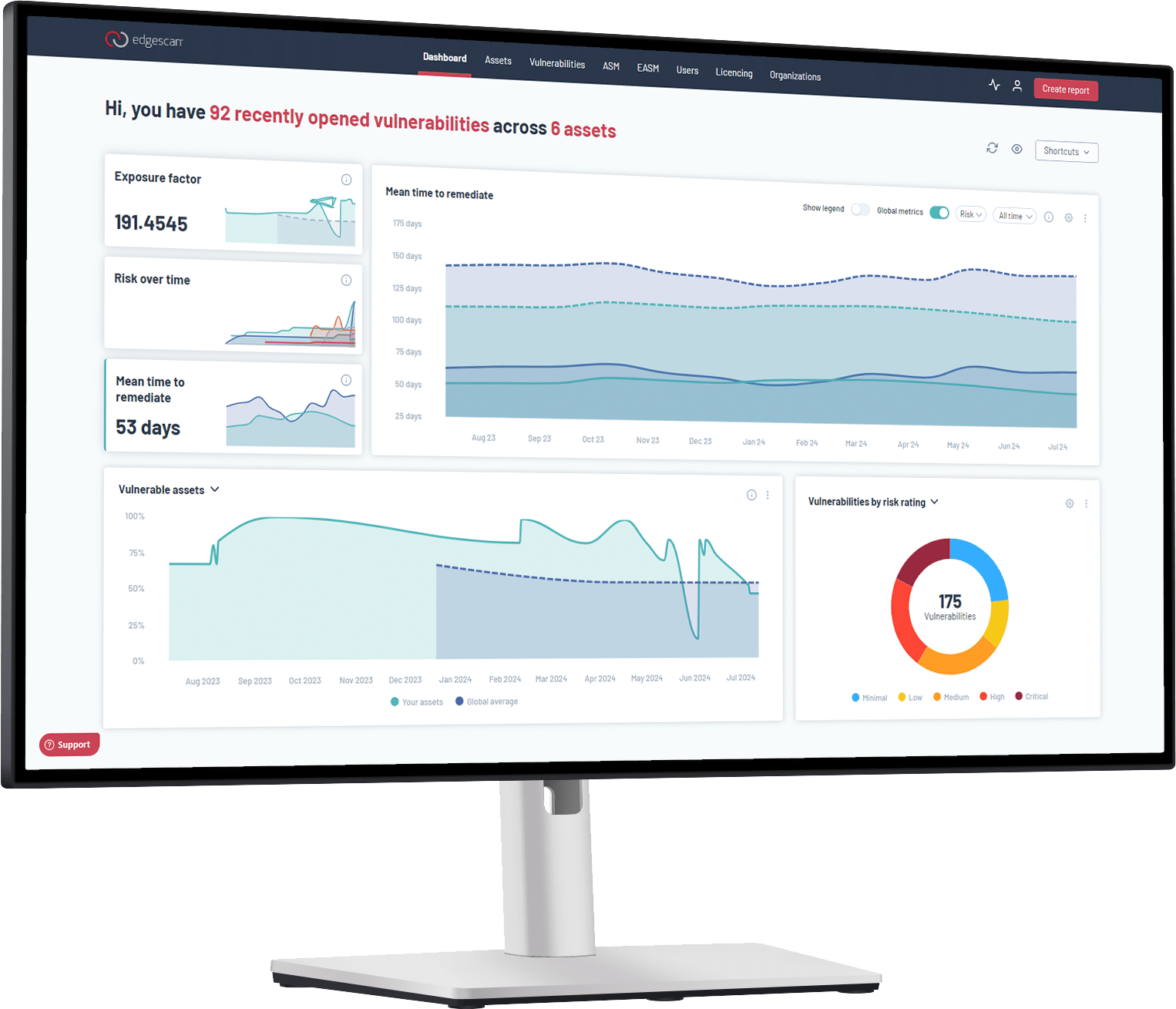

Edgescan’s specialized AI security assessment services can help you identify and remediate vulnerabilities in your AI systems before they become targets. Our expert team combines deep AI knowledge with proven security testing methodologies to deliver comprehensive protection for your most advanced technologies.

Remember, an ounce of prevention is worth a pound of cure. Taking proactive steps with Edgescan to secure your AI systems today prevents costlier problems tomorrow. As AI becomes more deeply integrated into your business operations, partner with Edgescan for specialized AI penetration testing to ensure your innovations remain both powerful and protected.

Schedule a demo today to see how Edgescan can strengthen your AI security posture and safeguard your most innovative systems.