False positives in cybersecurity waste time and money. They happen when security tools flag normal activities as threats. This creates big problems for security teams who need to focus on real issues.

Think about it. You get hundreds of alerts. You check them one by one. Many turn out to be nothing. Meanwhile, actual threats might slip through. This isn’t just annoying. It’s dangerous.

The Foundation Matters

The top of the funnel matters most. If you start with bad data, everything that follows is compromised.

You can add all the fancy threat intelligence and metadata you want. But if the original vulnerability report isn’t accurate, you’re just making the noise problem worse. All that extra information amplifies false positives instead of helping.

Accuracy isn’t just important. It’s essential.

The Real Cost of False Positives

1. Resource Drain and Wasted Time

Security teams spend too much time chasing ghosts. A 2023 Ponemon Institute study found that organizations waste about 25% of their security team’s time investigating things that aren’t real threats.

For teams already stretched thin, this means critical vulnerabilities sit unpatched longer. A security analyst who spends three hours checking a false positive could have used that time patching three critical vulnerabilities. Unlike automated tools like Nessus or Qualys that flood you with unverified alerts, human-validated results focus your team on what really matters.

2. Increased Operational Costs

False positives cost real money. Each alert needs investigation. That means people, computing resources, and sometimes expensive consultants.

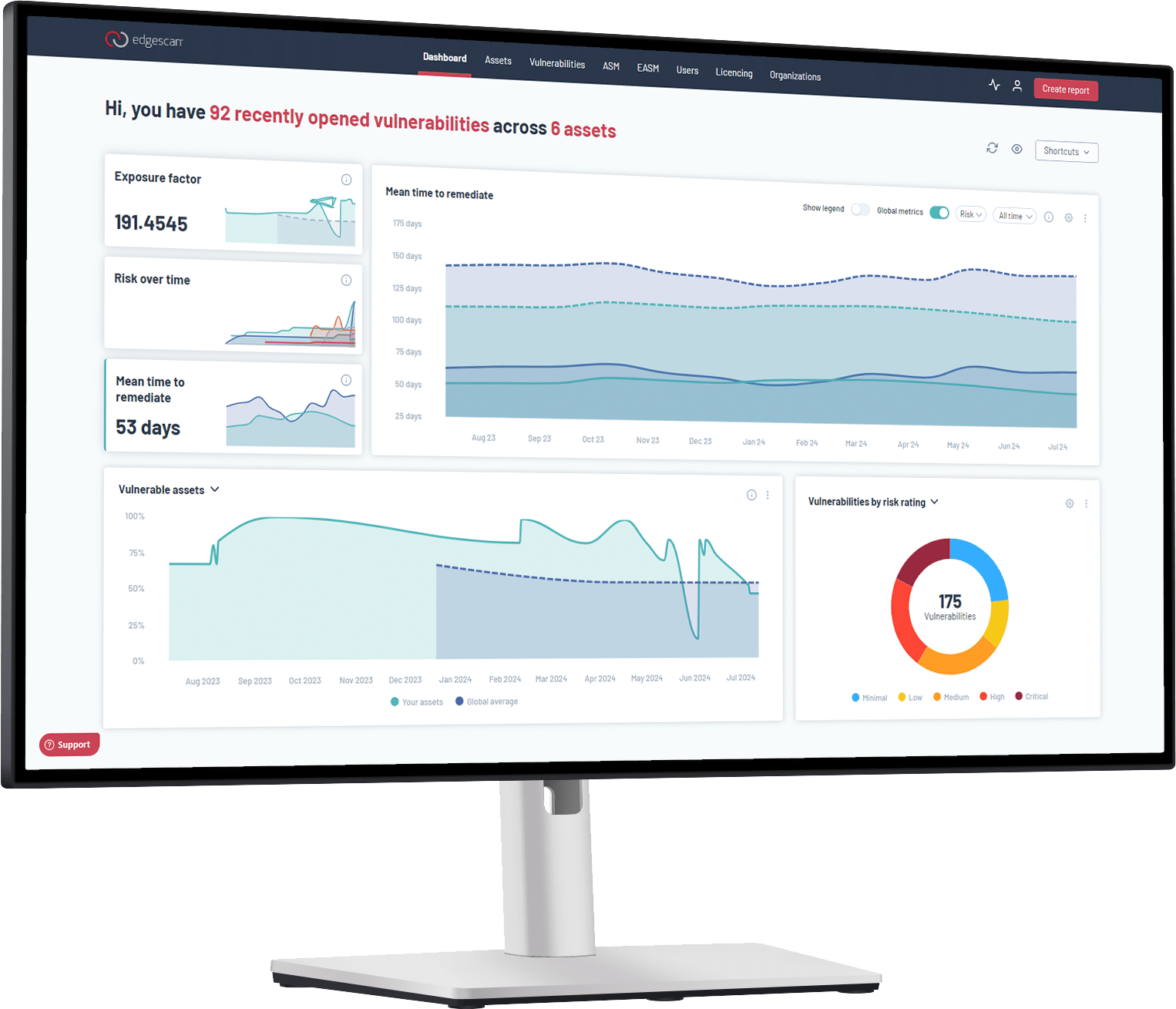

A medium-sized company might spend $150,000 yearly just handling false positives. Their mean time to remediation (MTTR) grows longer as teams chase phantom issues while actual vulnerabilities remain open. The difference between automated-only tools and expert-validated approaches can save thousands of hours and dollars annually.
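To see how a figure of that size can add up, here is a rough back-of-the-envelope sketch. Every input (alert volume, false positive rate, minutes per alert, hourly cost, working days) is a hypothetical assumption chosen for illustration, not a number taken from any of the studies cited here.

```python
def annual_false_positive_cost(alerts_per_day, false_positive_rate,
                               minutes_per_alert, hourly_cost, workdays=250):
    """Estimate yearly analyst cost spent on false positives.

    A simple illustrative model: all parameters are assumptions.
    """
    # Number of alerts per year that turn out to be nothing.
    false_alerts_per_year = alerts_per_day * false_positive_rate * workdays
    # Analyst hours spent investigating those alerts.
    hours_wasted = false_alerts_per_year * minutes_per_alert / 60
    return hours_wasted * hourly_cost


# Hypothetical team: 100 alerts a day, 40% false, 15 minutes each, $60/hour.
cost = annual_false_positive_cost(
    alerts_per_day=100,
    false_positive_rate=0.4,
    minutes_per_alert=15,
    hourly_cost=60,
)
print(f"${cost:,.0f}")  # prints $150,000
```

Under these assumed inputs, 2,500 analyst hours a year go to alerts that were never real. Plug in your own numbers to get a figure for your team.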

3. Alert Fatigue and Desensitization

“The security tool that cried wolf” is a real problem. When most alerts are false, teams start ignoring them. A 2024 ESG report found that 34% of security professionals simply ignore certain alerts because they don’t trust them.

This creates a dangerous situation. When a real threat comes in, it might be overlooked. One security team I worked with had so many false positives they created a “probably ignore” folder. They later found a critical vulnerability sitting there for weeks. Human-verified approaches ensure alerts deserve attention.

4. Erosion of Trust in Security Tools

Teams stop trusting tools that lie to them repeatedly. They question results and may avoid using expensive security solutions altogether.

Take scanning tools like Burp Suite, Rapid7, or Qualys. They’re powerful but need constant human verification. Without it, results vary wildly. One client told me they only trust about 40% of their vulnerability scanner results. A validation process that checks every finding (92% automated validation with 8% expert verification) builds trust that the results are worth acting on.

5. Delayed Response to Real Threats

While teams handle false alarms, real threats wait. The 2025 Vulnerability Statistics Report showed high-risk vulnerabilities took 60% longer to fix when mixed with false positives.

Attackers don’t wait. They exploit vulnerabilities quickly, especially in fast-moving DevOps environments. When your team finds a SQL injection vulnerability three weeks late because they were busy with false positives, that’s three weeks in which attackers could have accessed your customer data.

6. Impact on Compliance and Audits

False positives create compliance headaches. Organizations must document and justify every reported vulnerability, even false ones. For industries like finance or healthcare under PCI DSS or HIPAA regulations, this can double the paperwork.

During one audit, a client had to explain 47 “critical vulnerabilities” that weren’t actually vulnerabilities at all. They spent three days on documentation that added no security value. PCI-approved scanning with validated results eliminates this wasted effort.

7. Negative Impact on DevOps and Business Agility

False positives break development workflows. They stop code releases and frustrate developers who must fix “problems” that don’t exist. A 2024 Forrester study found that 40% of DevOps teams see false positives as a major barrier to security integration.

One development team told me they disabled certain security checks entirely because false positives delayed releases too often. This created actual security gaps. Accurate reporting that integrates smoothly with CI/CD pipelines keeps development moving without sacrificing security.
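One way to picture this in a pipeline: block a release only on findings that have been validated, instead of on every raw scanner alert. The sketch below is a minimal illustration, not any particular product’s integration. The `severity` and `validated` fields and the `should_block_release` function are hypothetical names made up for this example.

```python
# Severities that are allowed to stop a release (an assumption for this sketch).
BLOCKING_SEVERITIES = {"critical", "high"}


def should_block_release(findings):
    """Return True only if a validated, high-impact finding exists.

    Each finding is a dict with hypothetical keys:
      "severity":  "critical" | "high" | "medium" | "low"
      "validated": True once the finding has been confirmed as real
    """
    return any(
        f["validated"] and f["severity"] in BLOCKING_SEVERITIES
        for f in findings
    )


# Hypothetical scan output: one unverified critical, one confirmed medium.
scan_results = [
    {"title": "Possible SQL injection", "severity": "critical", "validated": False},
    {"title": "Verbose error page", "severity": "medium", "validated": True},
]

if should_block_release(scan_results):
    print("Release blocked: validated high-risk finding")
else:
    print("Release allowed: nothing validated at blocking severity")
```

The unverified critical alert still goes to someone for review. It just doesn’t stop the release until it is confirmed, so developers aren’t tempted to switch the check off.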

The Value of a False-Positive-Free Approach

The false positive problem needs a hybrid approach. Technology alone isn’t enough. Neither is human review alone. You need both working together.

User reviews back this up (4.7/5 on Gartner Peer Insights). Combining advanced analytics with expert validation eliminates almost all false positives. Security teams can focus on real issues instead of chasing shadows.

Companies using the old approach rely too heavily on automation. They produce lots of alerts but little confidence. Expert validation delivers intelligence you can act on. This cuts alert fatigue, saves money, and speeds up fixing real problems.
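Here is a minimal sketch of what that hybrid flow could look like. It assumes automated validation gives each finding a confidence score. Clear-cut cases are settled automatically and the uncertain middle goes to a human expert. The `Finding` class, the `confidence` score, and both thresholds are hypothetical, chosen only to illustrate the idea.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    title: str
    confidence: float  # hypothetical score in [0, 1] from automated validation


def triage(findings, confirm_at=0.9, dismiss_at=0.1):
    """Split findings into auto-confirmed, auto-dismissed, and expert review."""
    queues = {"confirmed": [], "dismissed": [], "expert_review": []}
    for finding in findings:
        if finding.confidence >= confirm_at:
            queues["confirmed"].append(finding)      # automation is sure it is real
        elif finding.confidence <= dismiss_at:
            queues["dismissed"].append(finding)      # automation is sure it is noise
        else:
            queues["expert_review"].append(finding)  # a human makes the call
    return queues


# Hypothetical findings with made-up scores.
sample = [
    Finding("SQL injection in login form", 0.97),
    Finding("Outdated banner string", 0.05),
    Finding("Possible open redirect", 0.55),
]

for queue, items in triage(sample).items():
    print(queue, [f.title for f in items])
```

Where you set the thresholds decides how much work lands on experts. Tighter thresholds mean more human review and fewer mistakes slipping through on either side.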

The Bottom Line

False positives waste resources, cause alert fatigue, delay threat response, destroy trust in security tools, complicate compliance, and disrupt business. They create security gaps by distracting teams from real vulnerabilities.

A false-positive-free platform with human validation and full-stack coverage addresses these problems. It is more efficient and reliable than traditional automated scanning alone.