Enterprise security teams are drowning. While they discover vulnerabilities faster than ever, they’re falling further behind on actually fixing them. The numbers tell a stark story: according to Edgescan’s 2025 Vulnerability Statistics Report, large enterprises maintain vulnerability backlogs where 45.4% of discovered vulnerabilities remain unpatched after 12 months, with 17.4% being high or critical severity.

This isn’t just a technical problem – it’s a business crisis hiding in plain sight.

The Accumulation Problem

The vulnerability backlog crisis stems from a fundamental mismatch between discovery and remediation rates. Organizations have become efficient at finding security flaws but struggle with the complex process of actually fixing them.

The data reveals the scope of the challenge:

- Average remediation time for applications: 74.3 days

- Average remediation time for network/infrastructure: 54.8 days

- Percentage of vulnerabilities closed within 6 months: 56%

- Critical and high severity vulnerabilities still open after 12 months: 17.4%
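The mismatch between discovery and remediation rates compounds quickly, and a little arithmetic makes that concrete. The sketch below is illustrative only: the monthly rates (100 findings discovered, 80 fixed) and the helper name `simulate_backlog` are assumptions, not figures from the Edgescan report.

```python
def simulate_backlog(discovered_per_month: int,
                     fixed_per_month: int,
                     months: int,
                     starting_backlog: int = 0) -> list[int]:
    """Return the number of open findings at the end of each month."""
    backlog = starting_backlog
    history = []
    for _ in range(months):
        backlog += discovered_per_month            # new findings arrive
        backlog -= min(backlog, fixed_per_month)   # team closes what it can
        history.append(backlog)
    return history


# Hypothetical rates: the team fixes 80% of what it finds each month.
history = simulate_backlog(discovered_per_month=100, fixed_per_month=80, months=12)
print(history[-1])  # 240 open findings after one year
```

Even a team that closes four out of every five findings ends the year with a backlog larger than two full months of discovery.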

Meanwhile, the threat landscape continues accelerating. In 2024 alone, 40,009 new CVEs were published – a record-breaking year for vulnerability discoveries. With 768 CVEs publicly reported as exploited for the first time, the window between discovery and exploitation continues shrinking.

Why Traditional Testing Makes the Problem Worse

Counterintuitively, many traditional security testing approaches actually contribute to vulnerability backlogs rather than reducing them. Here’s how:

The Quarterly Flood

Traditional penetration testing operates on quarterly or annual cycles, delivering large batches of findings all at once. A typical enterprise pen test might identify 50-100 vulnerabilities across multiple applications and systems. Security teams receive this information as a static PDF report and must manually:

- Validate each finding

- Prioritize based on business impact

- Coordinate remediation across multiple teams

- Track progress across different systems

- Manage competing priorities and resource constraints

This creates a “feast or famine” cycle where teams are overwhelmed by findings for weeks, then operate blind until the next scheduled assessment.

The False Positive Tax

Traditional scanning tools generate significant noise through false positives, forcing security teams to spend valuable time on triage rather than remediation. When teams lose confidence in their tools’ accuracy, they develop defensive behaviors:

- Over-validating obvious vulnerabilities

- Delaying remediation while conducting additional verification

- Ignoring lower-severity findings that might be legitimate

- Losing focus on high-priority items amid the noise

The Context Gap

Point-in-time assessments provide limited context for prioritization. A SQL injection vulnerability discovered six months ago might be far less critical than a new authentication bypass in a customer-facing application, but traditional reporting doesn’t provide the dynamic risk context needed for intelligent prioritization.

The Real Cost of Vulnerability Backlogs

Vulnerability backlogs aren’t just security metrics – they represent real business risk and operational costs:

Increased Attack Surface

Every unpatched vulnerability represents a potential entry point for attackers. Because the average time to exploit a vulnerability is just 15 days, vulnerabilities left unpatched for more than a month are essentially open doors for threat actors.

Compliance Exposure

Regulatory frameworks increasingly expect organizations to demonstrate timely vulnerability remediation. Long-standing vulnerability backlogs can trigger compliance violations and associated penalties.

Team Burnout

Security professionals report high levels of stress and burnout, partly due to the overwhelming nature of vulnerability backlogs. When teams can’t make meaningful progress despite working hard, morale suffers and turnover increases.

Resource Misallocation

Large backlogs make it difficult to focus on what matters most. Teams spend time on low-risk vulnerabilities while critical issues might remain unaddressed due to poor visibility and prioritization.

The Prioritization Problem

Not all vulnerabilities are created equal, but traditional approaches often treat them as if they are. The data shows significant variation in actual risk:

By Exploit Probability (EPSS Score):

- 13% of vulnerabilities have >80% probability of exploitation

- 16% have >60% probability of exploitation

- 26% have >10% probability of exploitation

By Edgescan eXposure Factor (EXF):

- 26% score >80 (highest priority)

- 34% score >50 (high priority)

- 39% score >10 (medium priority)

Organizations lacking sophisticated prioritization frameworks often work through backlogs chronologically or by CVSS score alone – approaches that don’t reflect actual business risk or likelihood of exploitation.

Breaking the Backlog Cycle

The solution requires fundamentally changing how organizations approach vulnerability management:

Continuous Assessment vs. Periodic Testing

Instead of quarterly vulnerability dumps, continuous assessment provides steady streams of validated findings that teams can address as part of regular development cycles. This approach:

- Distributes remediation work evenly across time

- Provides immediate feedback on fixes

- Reduces the overwhelming nature of large batches

- Enables integration with development workflows
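To see why a steady stream is easier to absorb than a quarterly dump, consider a rough simulation. The numbers are illustrative assumptions rather than report data: 78 findings per quarter (inside the 50-100 range mentioned earlier), a team that can close 6 findings per week, and a hypothetical helper named `peak_open_findings`.

```python
def peak_open_findings(arrivals: list[int], weekly_capacity: int) -> int:
    """Return the largest number of findings open at any one time."""
    open_findings = 0
    peak = 0
    for new in arrivals:
        open_findings += new                       # findings delivered this week
        peak = max(peak, open_findings)
        open_findings = max(0, open_findings - weekly_capacity)  # week's fixes
    return peak


WEEKS = 13                                  # one quarter
quarterly = [78] + [0] * (WEEKS - 1)        # everything lands in week 1
continuous = [6] * WEEKS                    # same total, spread evenly
capacity = 6                                # assumed fixes per week

print(peak_open_findings(quarterly, capacity))   # 78
print(peak_open_findings(continuous, capacity))  # 6
```

The total workload is identical in both cases. Only the delivery pattern changes, and with it the size of the pile the team has to triage at any one moment.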

Validated Intelligence vs. Raw Scanner Output

The most effective approach combines automated discovery with expert validation to eliminate false positives before they reach development teams. Edgescan’s data shows this hybrid model achieves 92% automation while requiring only 8% human validation for complex scenarios.

Dynamic Risk Scoring vs. Static Ratings

Modern vulnerability management requires dynamic risk scoring that considers:

- Current exploit availability and likelihood

- Business criticality of affected systems

- Compensating controls and environmental factors

- Threat intelligence and active exploitation data
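As a rough illustration of how these factors might combine, here is a minimal sketch. The formula, the weights, and every name in it (`Finding`, `dynamic_risk_score`) are assumptions made for this example; it is not how Edgescan's EXF or any other commercial score is calculated.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    identifier: str
    epss: float                     # exploit probability, 0.0 to 1.0
    actively_exploited: bool        # threat intel reports exploitation in the wild
    asset_criticality: float        # 0.0 (lab box) to 1.0 (business critical)
    has_compensating_control: bool  # e.g. a WAF rule or network isolation


def dynamic_risk_score(f: Finding) -> float:
    """Score from 0 to 100, recomputed whenever any input changes."""
    # Active exploitation overrides the probability estimate.
    likelihood = 1.0 if f.actively_exploited else f.epss
    score = 100 * likelihood * f.asset_criticality
    if f.has_compensating_control:
        score *= 0.5                # assumed discount; tune per environment
    return round(score, 1)


finding = Finding("FINDING-1", epss=0.05, actively_exploited=False,
                  asset_criticality=0.8, has_compensating_control=False)
print(dynamic_risk_score(finding))  # 4.0

# New threat intelligence arrives: the same finding jumps up the queue.
finding.actively_exploited = True
print(dynamic_risk_score(finding))  # 80.0
```

The point is not the particular formula but that the score is a function of inputs that change over time, so it has to be recalculated rather than assigned once in a report.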

Practical Remediation Strategies

Organizations successfully reducing vulnerability backlogs implement several key practices:

Risk-Based Prioritization

Focus on vulnerabilities that have high EPSS scores, appear in the CISA KEV catalog, or affect business-critical systems. The data shows that vulnerabilities with EPSS scores above 0.7 have similar remediation times to lower-risk vulnerabilities, suggesting organizations aren’t effectively prioritizing based on actual exploit probability.
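One way to turn those three signals into a work queue is a simple multi-key sort. This is a sketch under stated assumptions: the `Vuln` record, the `remediation_order` helper, the placeholder identifiers, and the ordering of the keys (KEV first, then EPSS, then business criticality, with CVSS only as a tie-breaker) are all choices made for illustration.

```python
from typing import NamedTuple


class Vuln(NamedTuple):
    identifier: str
    epss: float              # exploit probability, 0.0 to 1.0
    in_kev: bool             # listed in the CISA KEV catalog
    business_critical: bool  # affects a business-critical system
    cvss: float              # base severity, 0.0 to 10.0


def remediation_order(vulns: list[Vuln]) -> list[Vuln]:
    """Sort so the riskiest findings come first."""
    return sorted(
        vulns,
        key=lambda v: (not v.in_kev, -v.epss, not v.business_critical, -v.cvss),
    )


backlog = [
    Vuln("VULN-1", epss=0.02, in_kev=False, business_critical=False, cvss=9.8),
    Vuln("VULN-2", epss=0.85, in_kev=False, business_critical=True, cvss=7.5),
    Vuln("VULN-3", epss=0.40, in_kev=True, business_critical=True, cvss=6.5),
]

for v in remediation_order(backlog):
    print(v.identifier)
# VULN-3
# VULN-2
# VULN-1
```

Sorting by CVSS alone would have put VULN-1 at the top, even though it is the least likely of the three to be exploited.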

Integration with Development Workflows

Successful programs integrate vulnerability management with existing development processes through:

- API connections to issue tracking systems (Jira, GitHub)

- Automated ticket creation for new findings

- Integration with CI/CD pipelines for continuous validation

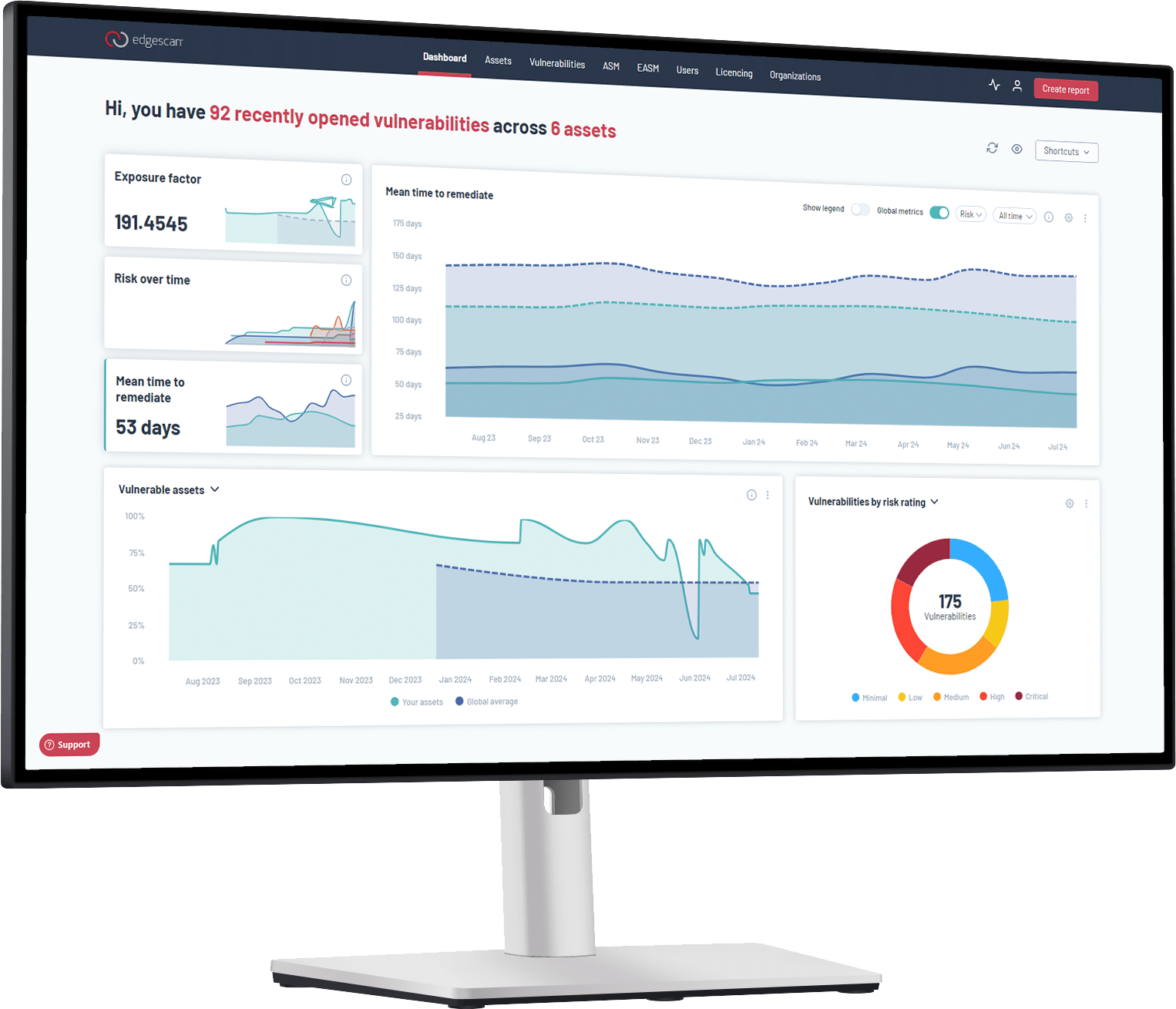

- Dashboards that provide real-time visibility into remediation progress
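The automated ticket creation step from the list above might look something like the following. It is a sketch, not a real integration: the payload shape is a generic, hypothetical one rather than the Jira or GitHub API format, and the helper names (`fingerprint`, `build_ticket`, `tickets_for_new_findings`) are invented for this example. The part worth copying is the fingerprint, which stops a rescan from opening a second ticket for the same issue.

```python
import hashlib


def fingerprint(finding: dict) -> str:
    """Stable ID for a finding, so rescans don't create duplicate tickets."""
    raw = f"{finding['asset']}|{finding['title']}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()[:12]


def build_ticket(finding: dict) -> dict:
    """Build a generic ticket payload (hypothetical shape, not a vendor API)."""
    severity = finding["severity"]
    return {
        "title": f"[{severity.upper()}] {finding['title']} on {finding['asset']}",
        "body": finding.get("details", ""),
        "labels": ["security", f"severity:{severity}"],
        "fingerprint": fingerprint(finding),
    }


def tickets_for_new_findings(findings: list[dict], already_ticketed: set) -> list[dict]:
    """Return payloads only for findings that have no ticket yet."""
    tickets = []
    for finding in findings:
        fp = fingerprint(finding)
        if fp in already_ticketed:
            continue                  # already tracked, skip
        already_ticketed.add(fp)
        tickets.append(build_ticket(finding))
    return tickets


scan = [
    {"asset": "shop.example.com", "title": "SQL injection", "severity": "high"},
    {"asset": "shop.example.com", "title": "SQL injection", "severity": "high"},
    {"asset": "api.example.com", "title": "Auth bypass", "severity": "critical"},
]
seen = set()
for ticket in tickets_for_new_findings(scan, seen):
    print(ticket["title"])
```

In practice each payload would be mapped to the tracker's own create-issue format and sent through its API client.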

Unlimited Retesting

Traditional testing models charge for retesting, creating financial disincentives to validate fixes. Unlimited retesting enables teams to:

- Verify remediation immediately after implementing fixes

- Iterate on complex vulnerabilities requiring multiple attempts

- Maintain continuous validation without budget constraints

Clear Accountability

Establish clear ownership and SLAs for different vulnerability types:

- Critical vulnerabilities: 7-day remediation target

- High vulnerabilities: 30-day remediation target

- Medium vulnerabilities: 90-day remediation target

- Low vulnerabilities: Next major release cycle
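These targets are easy to encode so that every finding carries a due date from the moment it is opened. The sketch below uses the day counts from the list above; the function names `sla_due_date` and `is_breached` are assumptions, and low-severity findings return no date because they are tied to a release cycle rather than a fixed number of days.

```python
from datetime import date, timedelta
from typing import Optional

# Remediation targets in days, taken from the SLA list above.
SLA_DAYS = {"critical": 7, "high": 30, "medium": 90}


def sla_due_date(severity: str, discovered: date) -> Optional[date]:
    """Return the remediation deadline, or None when no day-based SLA applies."""
    days = SLA_DAYS.get(severity.lower())
    if days is None:
        return None  # e.g. low severity: handled in the next major release
    return discovered + timedelta(days=days)


def is_breached(severity: str, discovered: date, today: date) -> bool:
    """True when a still-open finding is past its deadline."""
    due = sla_due_date(severity, discovered)
    return due is not None and today > due


found = date(2025, 1, 1)
print(sla_due_date("critical", found))                 # 2025-01-08
print(sla_due_date("medium", found))                   # 2025-04-01
print(is_breached("high", found, date(2025, 2, 15)))   # True
```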

Measuring Success

Effective backlog reduction requires tracking the right metrics:

Velocity Metrics:

- Mean time to remediation (MTTR) by severity level

- Percentage of vulnerabilities closed within SLA

- Trend analysis showing backlog growth or reduction over time
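The first two velocity metrics can be computed from nothing more than a list of closed findings. This is a minimal sketch: the input format (severity, days to fix), the sample values, and the helper names `mttr_by_severity` and `pct_within_sla` are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean


def mttr_by_severity(closed: list[tuple[str, int]]) -> dict[str, float]:
    """Mean days to remediate, grouped by severity."""
    buckets = defaultdict(list)
    for severity, days_to_fix in closed:
        buckets[severity].append(days_to_fix)
    return {severity: mean(days) for severity, days in buckets.items()}


def pct_within_sla(closed: list[tuple[str, int]], sla_days: dict[str, int]) -> float:
    """Percentage of closed findings fixed on or before their SLA target."""
    eligible = [(s, d) for s, d in closed if s in sla_days]
    if not eligible:
        return 0.0
    met = sum(1 for s, d in eligible if d <= sla_days[s])
    return 100 * met / len(eligible)


# Hypothetical sample: (severity, days from discovery to verified fix).
closed = [("critical", 5), ("critical", 11), ("high", 20), ("high", 45)]
targets = {"critical": 7, "high": 30}

print(mttr_by_severity(closed))         # {'critical': 8, 'high': 32.5}
print(pct_within_sla(closed, targets))  # 50.0
```

Tracking both numbers matters because a healthy-looking average can hide a long tail of findings that blew past their deadline.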

Quality Metrics:

- False positive rate of security findings

- Percentage of findings requiring re-work after attempted remediation

- Developer satisfaction with security tool accuracy

Business Impact Metrics:

- Reduction in overall risk exposure

- Compliance posture improvements

- Security team efficiency gains

The Path Forward

The vulnerability backlog crisis requires systematic change, not just better tools. Organizations must move from periodic, large-batch vulnerability discovery to continuous, validated assessment that integrates with development workflows.

The most successful approaches combine automated efficiency with human expertise, providing security teams with accurate, prioritized findings they can act on immediately rather than overwhelming backlogs they struggle to manage.

This transition isn’t just about security – it’s about enabling development velocity while maintaining a strong security posture. When security testing becomes a continuous enabler rather than a periodic roadblock, both security and development teams benefit.

Want to learn how to eliminate vulnerability backlogs in your organization? Our comprehensive PTaaS Guide 2025 provides detailed frameworks for implementing continuous assessment programs that reduce backlogs while improving security outcomes. The guide includes specific metrics, implementation timelines, and ROI calculations from organizations that have successfully made this transition.
